Introducing the Public Sector AI / Emerging Technologies Maturity Model (v1)

Artificial intelligence is no longer a future concept for government. It is here, deployed in eligibility systems, health and human services, public safety, and administrative operations. But as agencies explore new use cases, one fundamental question keeps surfacing:

How can we assess where a use case belongs on the maturity curve, balancing innovation, efficiency, ethics, and human impact?

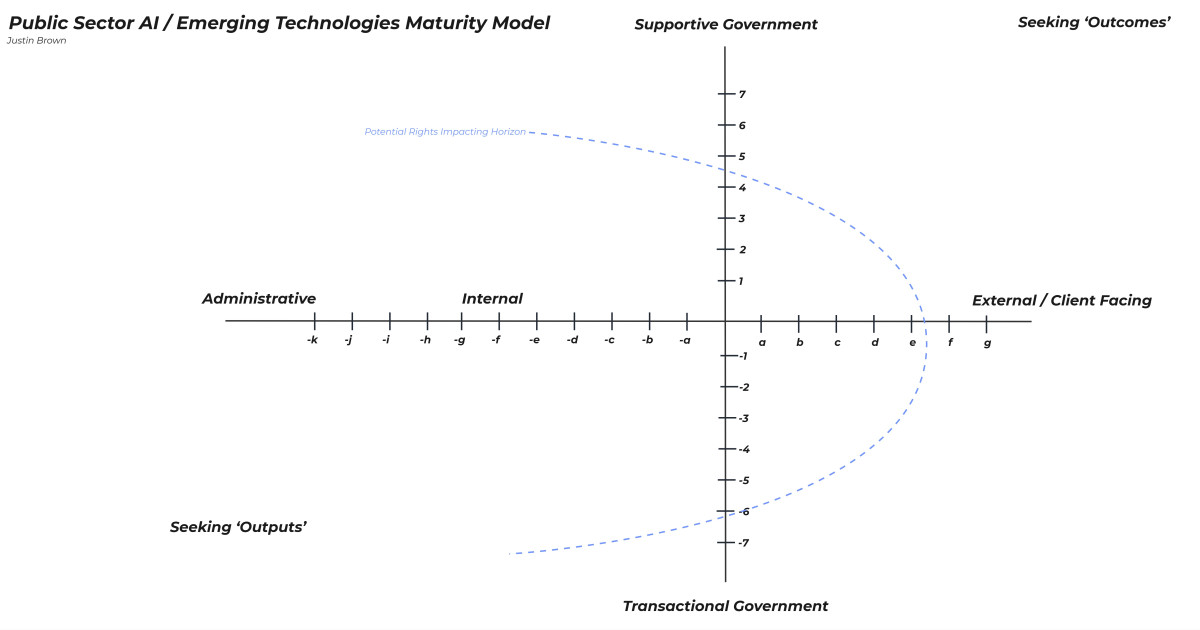

That question led me to begin sketching out an early framework, the Public Sector AI / Emerging Technologies Maturity Model (v1), as a way to visualize how government leaders might locate their technology initiatives across a shared landscape of purpose, risk, and maturity.

Mapping the Public Sector AI Landscape

This model organizes public-sector AI initiatives along two intersecting dimensions:

Horizontal Axis: Administrative → Internal → External / Client Facing

This reflects how close the system is to direct service delivery and human impact.

Vertical Axis: “Transactional Government” → “Supportive Government”

This axis identifies whether the implementation supports the basic transactions of government (think of issuing a license to operate a beauty salon) or whether the initiative sits within the deep and meaningful spaces in which citizens need a “relationship” with government (think of child welfare, mental health, criminal justice, etc.).

Each use case can then be plotted as a point on the map, offering a visual way to compare its maturity, risk, and return profile.

Circle Size represents scale of implementation.

Circle Color represents projected five-year budget impact (from cost savings in green to cost burdens in red).

Outputs vs. Outcomes: As projects move from the bottom-left quadrant toward the top-right quadrant, they generally shift from pursuing outputs to achieving real-world outcomes.

Understanding the Rights-Impacting Horizon

The dashed curve represents the Potential Rights-Impacting Horizon. This boundary marks the point where AI initiatives may begin to intersect with issues of human rights, privacy, and security. Projects that approach or cross this horizon require greater ethical oversight, transparent governance, and human monitoring. This does not mean that projects at or beyond the rights-impacting horizon should not proceed. It does mean they warrant intentional focus to ensure that proper protections are in place.

Within the public sector, this means evaluating not only technical performance but also social impact, consent, fairness, and other risk-mitigation measures. The horizon serves as a visual reminder that as use cases move outward toward citizen-facing applications, the stakes rise.

Agencies can use this horizon as a tool for risk stratification, helping determine when to add ethics reviews, human-centered design validation, or community engagement before implementation.

A Framework for Practical Decision-Making

By positioning AI use cases within this structure, agencies can start to ask the right questions:

Where is this initiative today, and where do we want it to be?

How does its current placement inform our governance, communications, or workforce and cultural readiness?

What guardrails do we need as we move from internal automation to client-facing outcomes?

It’s not about labeling technologies as “good” or “bad.”

It’s about creating a shared language for evaluating risk, return, and readiness across a portfolio of innovation.

A Living Model and a Community Conversation

This is version 1 (v1) of the model. Like the technologies it describes, it’s designed to evolve.

I’d love to hear your feedback and experiences:

What dimensions or variables do you believe are missing?

How might your agency or organization apply a model like this to guide ethical AI implementation?

Where would your current or planned AI projects fall on this map?

The visual version of the model appears above, and I’ll continue refining this framework as I receive input from practitioners, policymakers, and researchers.

Justin Brown

Founder, Global True North

Exploring human-centered systems transformation, technology, and culture in government